Why Run A/B Tests?

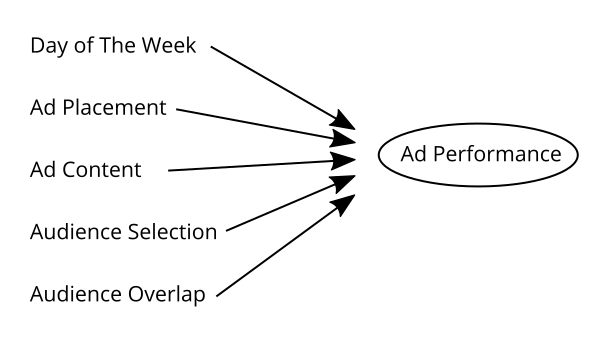

Ad platforms adjust many features of a campaign (placement, format, detailed targeting, etc.). So when you run different ads, it is very hard to tell what caused a difference in performance.

A/B tests (randomized controlled experiments) help you handle these many changes and discover what matters to your ad's performance.

Marketing content matters

In 2017, Nielsen released a big study of what matters in marketing. They found:

"...creative remains the undisputed champ in terms of sales drivers..."

Some content generates interest and clicks many times faster than other content. With competition in online advertising only getting stronger, these content differences can determine whether an ad is a profitable engine of growth or a money pit.

Finding the right content is hard

Though I've had the privilege to work with some truly gifted marketers and designers, I have yet to meet anyone who can predict what will work before trying it.

But even trying creative is challenging. When we run two ads, the ad platforms adapt many things and create many differences. Perhaps the ads receive impressions at different times. Perhaps the ads get different placements on the platforms, which has a notable impact on clicks. Maybe the audiences that see the ads are different. All of these differences affect performance.

When many things change at once, it takes a lot more work to understand the impact of each component.

Experiments to the rescue

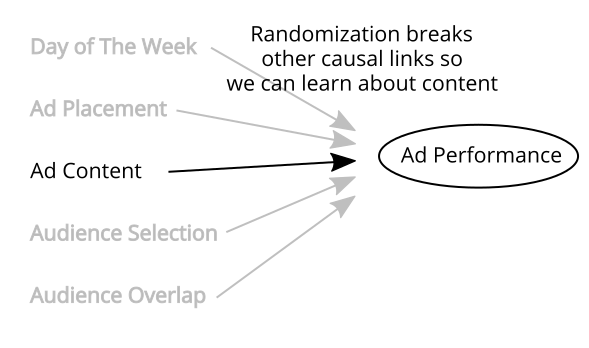

To understand what we should do to get the best performance, we need a way to limit the influence of some factors. Here is where the randomization in an A/B test really helps. Randomization allows us to break some causal connections, while also allowing us to measure our certainty when estimating others.
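To make the two ideas above concrete, here is a minimal Python sketch. It is an illustration, not how any ad platform implements its experiments. The function names (`randomize`, `ctr_difference_interval`), the viewer IDs, and the click counts are all hypothetical. The first function assigns each viewer to an ad by a coin flip, so placement, timing, and audience cannot systematically favor one ad. The second uses a simple normal approximation to put a 95% interval around the difference in click-through rate (CTR).

```python
import math
import random


def randomize(user_ids, seed=0):
    """Assign each viewer to ad "A" or "B" by a fair coin flip.

    Because the assignment ignores everything about the viewer,
    other factors balance out across the two groups on average.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    return {uid: rng.choice(["A", "B"]) for uid in user_ids}


def ctr_difference_interval(clicks_a, impressions_a,
                            clicks_b, impressions_b, z=1.96):
    """Return (difference, lower, upper) for CTR of B minus CTR of A.

    Uses a normal approximation; z=1.96 corresponds to roughly 95%.
    """
    rate_a = clicks_a / impressions_a
    rate_b = clicks_b / impressions_b
    diff = rate_b - rate_a
    std_err = math.sqrt(
        rate_a * (1 - rate_a) / impressions_a
        + rate_b * (1 - rate_b) / impressions_b
    )
    return diff, diff - z * std_err, diff + z * std_err


if __name__ == "__main__":
    # Hypothetical viewers and results, for illustration only.
    groups = randomize([f"viewer-{i}" for i in range(10)])
    print(groups)

    diff, lower, upper = ctr_difference_interval(100, 10_000, 130, 10_000)
    print(f"CTR difference: {diff:.4f} (95% interval {lower:.4f} to {upper:.4f})")
```

If the interval is narrow and excludes zero, the data favors one ad; if it is wide or straddles zero, the test likely needs more impressions before we can draw a conclusion.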

Conclusion

Using experiments well is like finally getting the right glasses. The world comes into focus. This allows us to adapt our ads and better understand what our customers want.